Standalone Kubernetes API Server using K3s Agentless

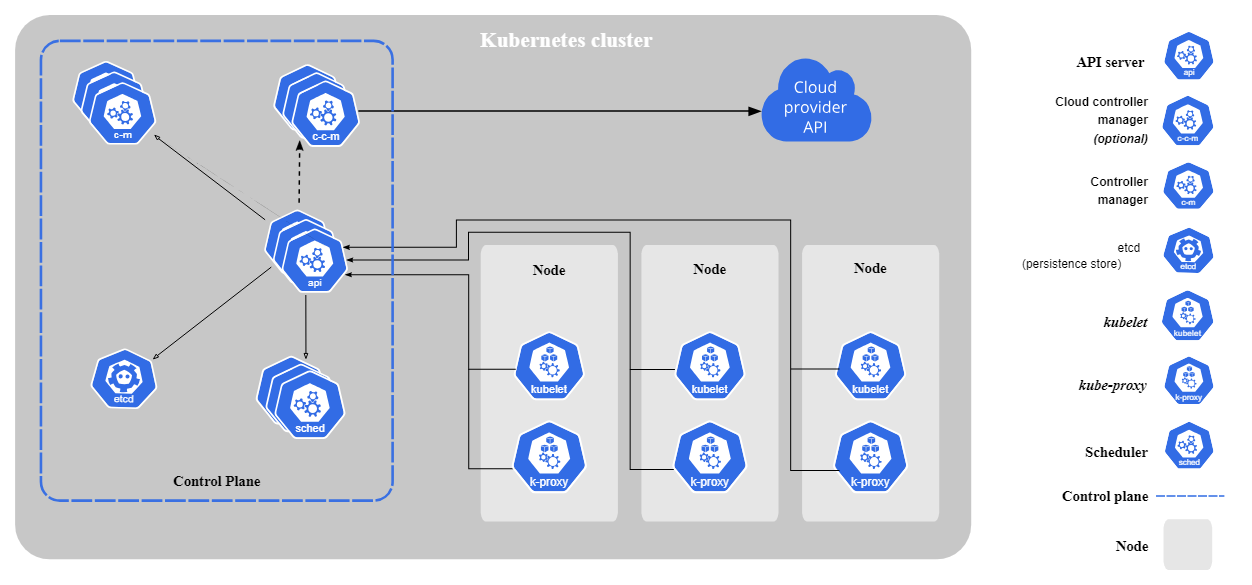

In most Kubernetes distributions, the control-plane nodes are themselves agents running a kubelet. In other words, they can run pod and container workloads. This leads to an interesting setup where the API server and the etcd database appear as pods running on the very cluster they make up. It is also practical: the control plane can be released and upgraded the same way as the worker nodes.

However, this is not the architecture used to introduce Kubernetes concepts. Take a look at the architecture diagram in the kubernetes.io introduction: the kubelet (the pod/container manager) is drawn completely separate from the control plane.

There are several reasons why I'd like to reproduce this model:

- Decouple the management of the Kubernetes control plane from the containers managed by the kubelet, and isolate it completely.

- Manage the Kubernetes API server like any other API server, much as you would a web server.

- Reproduce the managed control plane offered by GCP, AWS or Azure with their Kubernetes-as-a-Service products.

Of course, I could get the same behavior by carefully configuring each Kubernetes component myself. But for the same reason people choose packaged distributions, I'd like to do it with a well-known distribution that ships its own ecosystem.

K3s

Agentless functionality

I chose K3s because, as mentioned in the introduction, most distributions don't offer this kind of mechanism. After some research, I believe K3s may even be the only one offering an option to launch “Server” (control-plane) nodes without the kubelet daemon.

What I really like about K3s is that the distribution is all-inclusive: there is a single binary to install, and the whole ecosystem is packaged inside it. That makes everything pleasant: updating, day-to-day use and debugging.

The K3s documentation lists one interesting option:

[...] When started with the --disable-agent flag, servers do not run the kubelet, container runtime, or CNI. They do not register a Node resource in the cluster, and will not appear in kubectl get nodes output. [...]
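On a host where K3s is installed from its binary, the same flag can also be set in the K3s configuration file, whose keys mirror the CLI flags without the leading dashes. A minimal sketch, assuming the default config path; the tls-san hostname is a hypothetical placeholder:

```yaml
# /etc/rancher/k3s/config.yaml on the control-plane host (sketch).
# Same effect as running: k3s server --disable-agent
disable-agent: true

# Hypothetical extra name for the API server certificate, useful when
# workers and clients reach the server through a DNS name or load balancer.
tls-san:
  - k3s-api.example.internal
```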

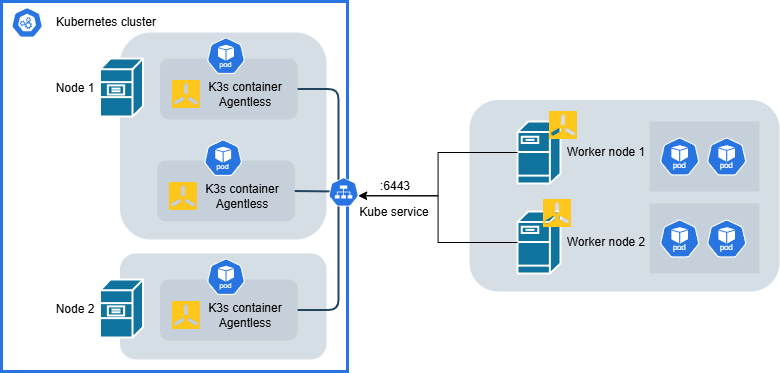

With this option, the server node does not start any container orchestration; only the control-plane services, such as the datastore (etcd) and the API server, are active. K3s is also available as a prepackaged Docker image (hub.docker.com/r/rancher/k3s). In this mode, the K3s container can even run without privileges!

The following docker-compose can create the agentless architecture:

(Please adapt the code to your environment, for example by adding a load balancer if required.)
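A minimal sketch of such a compose file, assuming a single agentless server and a single agent on the same Docker network. The service names, the shared changeme token and the image tag (the Docker tag matching the v1.30.6+k3s1 release in the kubectl output) are illustrative choices, not requirements. Following the point above, the server service is not privileged, while the agent, which runs containerd and the kubelet, is.

```yaml
# docker-compose.yml (sketch): one agentless K3s server + one K3s agent.
# Service names, token and image tag are illustrative assumptions.
services:
  k3s-server:
    image: rancher/k3s:v1.30.6-k3s1
    # Control plane only: no kubelet, container runtime or CNI,
    # so no "privileged: true" on this service.
    command: server --disable-agent --tls-san=k3s-server
    environment:
      - K3S_TOKEN=changeme
      # Write an admin kubeconfig into the bind-mounted ./output directory
      - K3S_KUBECONFIG_OUTPUT=/output/kubeconfig.yaml
      - K3S_KUBECONFIG_MODE=644
    volumes:
      - k3s-server-data:/var/lib/rancher/k3s
      - ./output:/output
    ports:
      - "6443:6443"   # Kubernetes API server

  k3s-agent:
    image: rancher/k3s:v1.30.6-k3s1
    command: agent
    # The agent runs containerd and the kubelet, so it needs privileges
    privileged: true
    tmpfs:
      - /run
      - /var/run
    environment:
      - K3S_URL=https://k3s-server:6443
      - K3S_TOKEN=changeme
    depends_on:
      - k3s-server

volumes:
  k3s-server-data:
```

With this file, docker compose up -d starts both containers, and KUBECONFIG=./output/kubeconfig.yaml kubectl get nodes should list only the agent, under its container hostname.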

Running kubectl get nodes then lists a single node, the agent:

$ kubectl get nodes
NAME          STATUS   ROLES    AGE   VERSION
k3s-agent-2   Ready    <none>   11s   v1.30.6+k3s1

If the worker nodes need to reach the agentless server behind NAT, add the following parameters:

- --node-external-ip (server): specify the server's external IP (the NAT IP or the load balancer IP).

- --disable-apiserver-lb (worker): disable the worker node's internal load balancer, so the worker only contacts the --server URL.
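As a sketch, assuming native installs configured through /etc/rancher/k3s/config.yaml on each host, the two parameters could be split between the machines as follows. The address 203.0.113.10 is a placeholder from the documentation IP range standing in for the NAT or load balancer IP, and the token is an assumption. Both files are shown as two YAML documents for brevity, but each part belongs in its own host's config file. Setting node-external-ip on the server matters because K3s uses it as the default advertise address of the API server.

```yaml
# --- Server host: /etc/rancher/k3s/config.yaml (sketch) ---
disable-agent: true
token: changeme
# External IP seen by the workers (placeholder NAT / load balancer address)
node-external-ip: 203.0.113.10
# Make sure the API server certificate is valid for that address
tls-san:
  - 203.0.113.10
---
# --- Worker host: /etc/rancher/k3s/config.yaml (sketch) ---
server: https://203.0.113.10:6443
token: changeme
# Skip the client-side load balancer: talk only to the "server" URL above
disable-apiserver-lb: true
```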

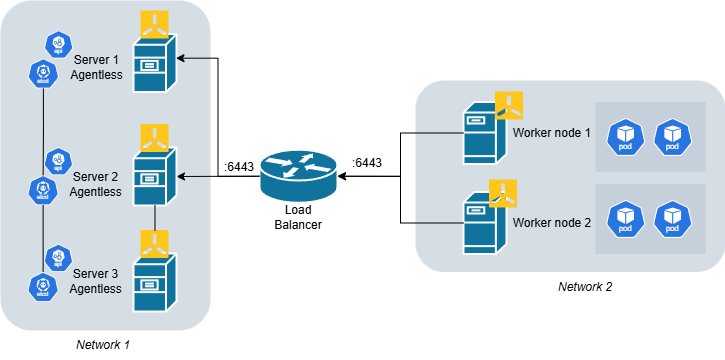

In the diagram, I've deliberately placed the worker nodes and the server on two different networks, so that the server is completely independent of the rest. The load balancer can be replaced by NAT, or removed entirely; it's just a demonstration of what is possible.

High availability version:

K3s in Kubernetes version:

To conclude, I've presented a solution based on K3s to manage the API server separately from the application workload. This architecture may be of interest if you need to separate API server management from worker lifecycle management.